When designing AI systems in 2025, model flexibility and infrastructure agility are no longer nice-to-haves. They’re core requirements.

The Problem: Many providers, many more models.

Every AI developer has been there. You start with OpenAI’s GPT-4, then realize you probably need Anthropic’s Claude, Google’s Gemini, and maybe some other model for cost optimization. Suddenly, you’re managing:

- Multiple API keys and authentication systems

- Different SDK formats and request structures

- Varying rate limits and billing systems

- Provider-specific error handling

- Inconsistent response formats
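To make the points about authentication, request structures, and response formats concrete, here is a minimal sketch of how the same prompt is shaped for two providers' native HTTP APIs. The helper names are hypothetical and nothing is sent over the network. The URLs, headers, and response fields reflect the publicly documented OpenAI Chat Completions and Anthropic Messages APIs as I understand them, so check them against current documentation before relying on them.

```python
def openai_request(prompt: str, api_key: str, model: str) -> dict:
    """Shape of a native OpenAI Chat Completions request (built, not sent)."""
    return {
        "url": "https://api.openai.com/v1/chat/completions",
        "headers": {"Authorization": f"Bearer {api_key}"},
        "json": {
            "model": model,
            "messages": [{"role": "user", "content": prompt}],
        },
    }


def anthropic_request(prompt: str, api_key: str, model: str) -> dict:
    """Shape of a native Anthropic Messages request (built, not sent)."""
    return {
        "url": "https://api.anthropic.com/v1/messages",
        # Different auth header, plus a mandatory API version header
        "headers": {"x-api-key": api_key, "anthropic-version": "2023-06-01"},
        "json": {
            "model": model,
            "max_tokens": 1024,  # required by this API; the value is arbitrary
            "messages": [{"role": "user", "content": prompt}],
        },
    }


def openai_text(body: dict) -> str:
    """Pull the reply text out of an OpenAI-style response body."""
    return body["choices"][0]["message"]["content"]


def anthropic_text(body: dict) -> str:
    """Pull the reply text out of an Anthropic-style response body."""
    return body["content"][0]["text"]
```

Even in this small case the auth header, required fields, and response path all differ, which is the glue code a unified API removes.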

But the real pain comes when you hit rate limits on a freshly created API account. OpenAI’s new accounts often start with restrictive limits, forcing you to either wait or use alternatives.

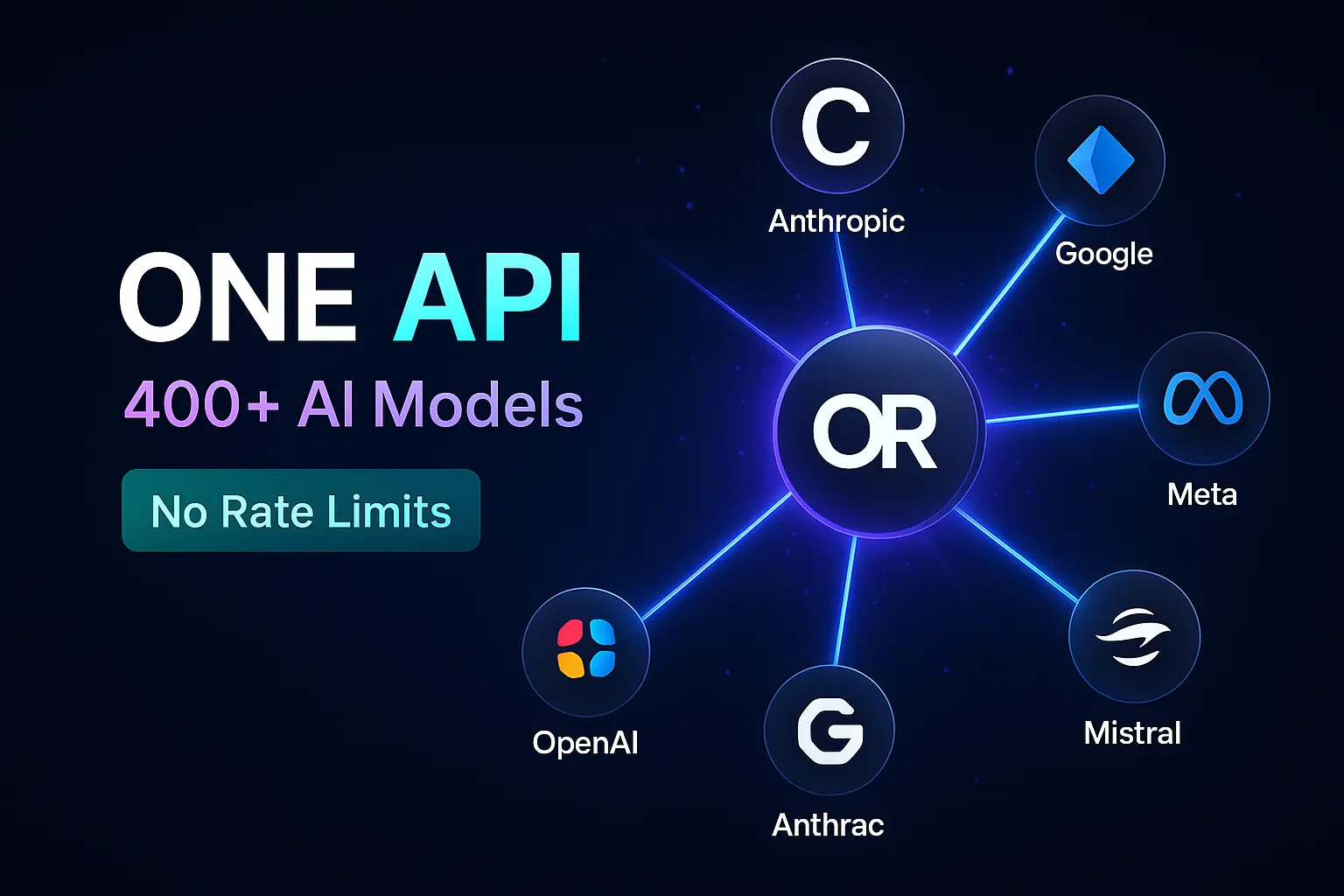

The OpenRouter Solution

OpenRouter addresses this with a unified API that abstracts away provider differences while offering higher effective usage limits.

Key Benefits:

- Single API for 400+ models: Access GPT-4, Claude, Gemini, Llama, and hundreds more through one endpoint

- Higher effective rate limits: Bypass individual provider restrictions through smart routing

- Cost optimization: Automatic routing to the most cost-effective providers

- Zero vendor lock-in: Switch models without changing your code

- High availability: Automatic failover to another provider when one goes down

- OpenAI-compatible format: Drop-in replacement for existing OpenAI integrations
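The model-switching and failover points can be sketched as a request payload. This assumes OpenRouter accepts a `models` field holding an ordered list of fallback models, which is how I read its routing documentation; verify the field name and behavior against the current docs. `build_payload` and the `OPENROUTER_MODEL` environment variable are hypothetical names used only for illustration.

```python
import os

DEFAULT_MODEL = "openai/gpt-4.1"


def build_payload(prompt: str, fallbacks=None) -> dict:
    """Build a chat completion payload whose model comes from configuration."""
    # Switching models becomes a config change instead of a code change
    model = os.environ.get("OPENROUTER_MODEL", DEFAULT_MODEL)
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    if fallbacks:
        # Assumed OpenRouter field: models to try if the primary one fails
        payload["models"] = list(fallbacks)
    return payload
```

The resulting dict can be posted as JSON to the chat completions endpoint. With the OpenAI SDK, a non-standard field like this one would be passed through the `extra_body` argument.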

Getting Started with OpenRouter

You can use OpenRouter directly through their API:

```python
import requests
import json

response = requests.post(
    url="https://openrouter.ai/api/v1/chat/completions",
    headers={
        "Authorization": "Bearer <OPENROUTER_API_KEY>",
    },
    data=json.dumps({
        "model": "openai/gpt-4.1",
        "messages": [
            {
                "role": "user",
                "content": "How to use OpenRouter?"
            }
        ]
    })
)
```

Or access it through the OpenAI SDK:

```python
# Run command: pip install -U openai
from openai import OpenAI

client = OpenAI(
    base_url="https://openrouter.ai/api/v1",
    api_key="<OPENROUTER_API_KEY>",
)

completion = client.chat.completions.create(
    model="openai/gpt-4.1",
    messages=[
        {
            "role": "user",
            "content": "How to use OpenRouter?"
        }
    ]
)

print(completion.choices[0].message.content)
```
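Hard-coding the key as in the snippets above is fine for a first test, but a key is usually safer read from the environment. Here is a minimal sketch, assuming the key is stored in a variable named `OPENROUTER_API_KEY` (a naming choice, not something the SDK requires). `openrouter_client_kwargs` is a hypothetical helper.

```python
import os

OPENROUTER_BASE_URL = "https://openrouter.ai/api/v1"


def openrouter_client_kwargs(env=None) -> dict:
    """Return keyword arguments for an OpenAI-compatible client.

    `env` defaults to os.environ; passing a plain dict makes this easy to test.
    """
    env = os.environ if env is None else env
    api_key = env.get("OPENROUTER_API_KEY", "").strip()
    if not api_key:
        # Fail early with a clear message instead of a 401 at request time
        raise RuntimeError("Set OPENROUTER_API_KEY before creating the client")
    return {"base_url": OPENROUTER_BASE_URL, "api_key": api_key}


# Usage (requires `pip install -U openai`):
#   from openai import OpenAI
#   client = OpenAI(**openrouter_client_kwargs())
```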

Cost optimizations

Now let’s see what we gain in practice from using a unified API.

Let’s assume the AI has to handle tasks of varying complexity. Trivial questions and basic classification can be handled by small models, so for those tasks we can use cheap models to minimize cost.

```python
import asyncio

from openai import AsyncOpenAI

# Async client, so awaiting a completion does not block the event loop
client = AsyncOpenAI(
    base_url="https://openrouter.ai/api/v1",
    api_key="<OPENROUTER_API_KEY>",
)

# Updated models by cost tier for 2025
COST_TIERS = {
    "budget": [
        "meta-llama/llama-3.2-3b-instruct",
        "google/gemma-3n-e2b-it",
        "mistralai/ministral-3b",
    ],
    "balanced": [
        "openai/gpt-4o-mini",
        "anthropic/claude-3.5-haiku",
        "google/gemini-2.5-flash",
    ],
    "premium": [
        "openai/gpt-4o",
        "anthropic/claude-3.7-sonnet",
        "google/gemini-2.5-pro",
    ],
}


async def cost_optimized_completion(
    message: str,
    complexity: str = "balanced",
    max_tokens: int = 1000,
) -> dict:
    """Select a model based on task complexity and cost requirements."""
    # Unknown tier names fall back to the "balanced" tier
    models = COST_TIERS.get(complexity, COST_TIERS["balanced"])

    # Try each model in the tier in order until one succeeds
    for model in models:
        try:
            response = await client.chat.completions.create(
                model=model,
                messages=[{"role": "user", "content": message}],
                max_tokens=max_tokens,
            )
            return {
                "response": response.choices[0].message.content,
                "model": model,
                "tier": complexity,
            }
        except Exception as e:
            print(f"Model {model} failed: {e}")
            continue

    raise RuntimeError("All models in tier failed")


async def main():
    # Usage examples
    simple_task = await cost_optimized_completion(
        "What's 2+2?",
        complexity="budget",
    )
    complex_task = await cost_optimized_completion(
        "Write a comprehensive analysis of quantum computing's impact on cryptography",
        complexity="premium",
    )
    print(simple_task["model"], complex_task["model"])


# Top-level await only works in notebooks; scripts need asyncio.run()
asyncio.run(main())
```
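In the example above the complexity tier is chosen by hand. One way to pick it automatically is a cheap heuristic on the prompt itself. The sketch below is an illustrative assumption, not an OpenRouter feature: `pick_tier`, the keyword list, and the word-count thresholds are all arbitrary and would need tuning on real traffic.

```python
# Hypothetical keywords that suggest a task needs a stronger model
REASONING_HINTS = ("analysis", "analyze", "compare", "prove", "design", "comprehensive")


def pick_tier(message: str) -> str:
    """Guess a cost tier ("budget", "balanced", "premium") from the prompt text."""
    text = message.lower()
    word_count = len(text.split())

    # Explicit reasoning keywords or very long prompts go to the premium tier
    if any(hint in text for hint in REASONING_HINTS) or word_count > 200:
        return "premium"
    # Medium-length prompts get the balanced tier
    if word_count > 20:
        return "balanced"
    # Short, keyword-free prompts are assumed to be simple
    return "budget"
```

The result can be passed straight through, for example `cost_optimized_completion(msg, complexity=pick_tier(msg))`. A small classifier model could replace the keyword check if the heuristic misroutes too often.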

Web Search

The OpenRouter API lets you enable web search for a call by appending the :online suffix to the model name:

```json
{
  "model": "openai/gpt-4o:online"
}
```

Web search results for all models follow the same annotation schema:

```json
{
  "message": {
    "role": "assistant",
    "content": "Here's the latest news I found: ...",
    "annotations": [
      {
        "type": "url_citation",
        "url_citation": {
          "url": "https://www.example.com/web-search-result",
          "title": "Title of the web search result",
          "content": "Content of the web search result", // Added by OpenRouter if available
          "start_index": 100, // The index of the first character of the URL citation in the message.
          "end_index": 200 // The index of the last character of the URL citation in the message.
        }
      }
    ]
  }
}
```

Conclusion

OpenRouter transforms AI development by eliminating the complexity of multi-provider integration while providing superior rate limits, cost optimization, and reliability. The unified API approach means you can:

- Start faster: No need to set up multiple provider accounts and SDKs

- Scale better: Higher effective rate limits through intelligent routing

- Cost less: Automatic optimization for price and performance

- Stay flexible: Easy model switching without code changes

- Build reliably: High availability through automatic failover

Whether you’re building a simple chatbot or a complex AI application, OpenRouter provides the foundation you need to succeed in the rapidly evolving AI landscape. The examples shown here demonstrate just a fraction of what’s possible when you have unified access to the world’s best AI models.

Kamil Kwapisz