Selenium isn’t just for automation; it can also be used for web scraping. In this article, I’ll show you how to do web scraping with Selenium.

When Should You Use Selenium for Web Scraping?

While HTTP is the default protocol for the web, many modern websites rely heavily on JavaScript. This means a simple HTTP request may not be enough to extract content.

It’s also very easy for a website to detect whether a real browser was used or just a simple GET request was sent. Of course, some sites let you browse without JS, but even those may get suspicious about your activity.

Dynamic Content Handling

Selenium is one solution to this problem (among a few), as it allows you to remotely control a real browser—the same one you use when browsing your favorite pages.

When using Selenium (or any similar tool) you can easily:

- click on elements,

- fill forms and inputs,

- move the mouse, drag and drop elements,

- wait for elements to appear after loading,

- access content that only appears after scrolling or clicking,

- interact with dropdown menus, sliders, and other complex UI elements.

Bypassing Anti-Scraping

It’s common for big websites to use anti-scraping services.

Some anti-scraping measures are difficult to bypass and require a high-quality IP, proper browser and TLS fingerprinting, and maintaining a human-like browsing pattern.

However, when scraping some easier websites, Selenium may help with bypassing those anti-scraping systems.

The easiest anti-scraping measures to bypass with Selenium are those that check the user agent and whether JavaScript execution is disabled. When you drive a recent browser with Selenium, you have a pretty high chance of getting through.

Selenium may also help against more sophisticated systems. It’s a good idea to use some of the popular Chrome options to increase the chances of getting through anti-scraping systems.

Getting Started with Selenium for Web Scraping

Let’s finally get to the scraping! 🙂 For this post, we’ll be using Python.

Let’s start with installation via pip:

pip install selenium

Initializing browser

To start scraping, we need to initialize a web browser and navigate to a specific URL. But let’s add some chrome options to improve our scraper from the very beginning.

from selenium import webdriver

from selenium.webdriver.chrome.options import Options

chrome_options = Options()

chrome_options.add_argument('--start-maximized')

chrome_options.add_argument('--disable-infobars')

chrome_options.add_argument('--enable-javascript')

chrome_options.add_experimental_option("useAutomationExtension", False)

chrome_options.add_experimental_option("excludeSwitches", ["enable-automation"])

driver = webdriver.Chrome(options=chrome_options)

URL = "https://stackoverflow.com/questions"

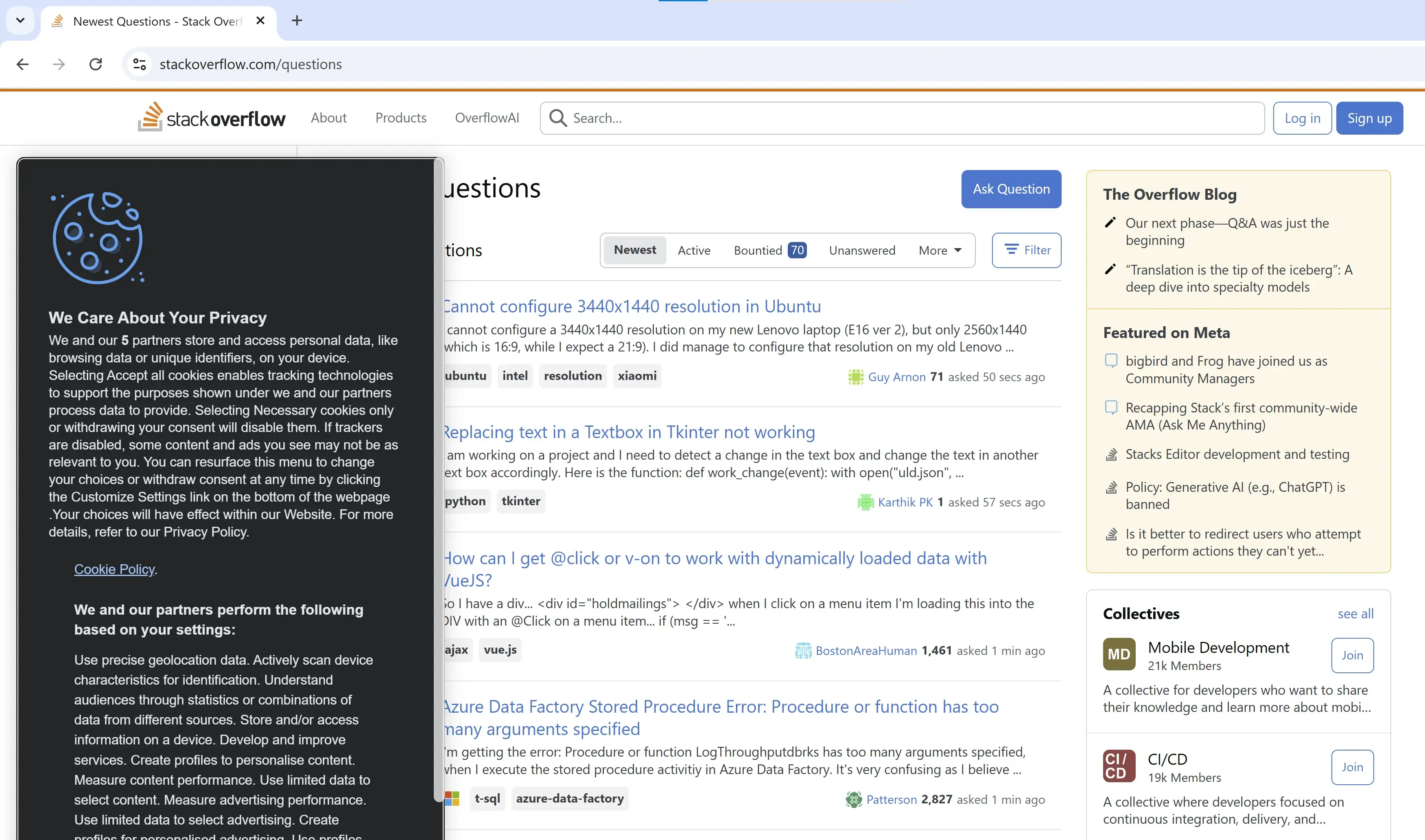

driver.get(URL)You should be able to see Stackoverflow page, just like this:

Attention! If you face issues with the webdriver, check out this external library: WebdriverManager
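The Chrome options used above can be collected in one small helper, so that every scraper starts from the same configuration. This is only a sketch: the names build_chrome_arguments and make_driver and their parameters are made up for this example, and the --headless=new and --user-agent switches are optional additions that the snippet above does not use.

```python
def build_chrome_arguments(headless=False, user_agent=None):
    """Return a list of Chrome command-line switches for a scraping session.

    Hypothetical helper: the name and parameters are not part of Selenium.
    """
    arguments = ["--start-maximized", "--disable-infobars"]
    if headless:
        # Assumption: a recent Chrome that understands the "new" headless mode
        arguments.append("--headless=new")
    if user_agent:
        arguments.append(f"--user-agent={user_agent}")
    return arguments


def make_driver(headless=False, user_agent=None):
    """Start Chrome with the switches above plus the experimental options."""
    # Imported lazily so build_chrome_arguments works without Selenium installed
    from selenium import webdriver
    from selenium.webdriver.chrome.options import Options

    chrome_options = Options()
    for argument in build_chrome_arguments(headless, user_agent):
        chrome_options.add_argument(argument)
    chrome_options.add_experimental_option("useAutomationExtension", False)
    chrome_options.add_experimental_option("excludeSwitches", ["enable-automation"])
    return webdriver.Chrome(options=chrome_options)
```

Keeping the list of switches in a plain function makes it easy to inspect or adjust the configuration without launching a browser.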

Locating elements

The primary activity of a web scraper is locating elements on a page.

In Selenium, there are a few pretty useful ways to do that:

from selenium.webdriver.common.by import By

driver.find_element(By.ID, "username")

driver.find_element(By.NAME, "email")

driver.find_element(By.XPATH, '//button[text()="Submit"]')

driver.find_element(By.LINK_TEXT, "Forgot Password?")

driver.find_element(By.PARTIAL_LINK_TEXT, "Sign up")

driver.find_element(By.TAG_NAME, "input")

driver.find_element(By.CLASS_NAME, "form-control")

driver.find_element(By.CSS_SELECTOR, "div.container > form input[type='text']")

You can read more about it in the official Python Selenium documentation.
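One detail worth knowing about the locators above: find_element raises NoSuchElementException when nothing matches, while find_elements (plural) returns an empty list. A minimal sketch of a helper built on that behaviour follows; the name find_or_none is hypothetical and not part of Selenium.

```python
def find_or_none(context, by, value):
    """Return the first matching element, or None when nothing matches.

    `context` is a driver or an element; both expose find_elements(by, value),
    which returns an empty list instead of raising when there is no match.
    """
    matches = context.find_elements(by, value)
    return matches[0] if matches else None
```

With a real driver this could be called as find_or_none(driver, By.ID, "username"), which is handy for optional elements such as banners that do not always appear.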

Using buttons

Now that we know how to locate an element, let’s locate one and click it!

We definitely need to click the button that accepts the cookies banner:

driver = webdriver.Chrome(options=chrome_options)

URL = "https://stackoverflow.com/questions"

driver.get(URL)

driver.find_element(By.CSS_SELECTOR, "#onetrust-accept-btn-handler").click()

If you run this code, you’ll end up with a weird error message like this:

selenium.common.exceptions.NoSuchElementException: Message: no such element: Unable to locate element: {"method":"css selector","selector":"#onetrust-accept-btn-handler"}

(Session info: chrome=133.0.6943.142); For documentation on this error, please visit: https://www.selenium.dev/documentation/webdriver/troubleshooting/errors#no-such-element-exception

Stacktrace:

GetHandleVerifier [0x00007FF62E08C6A5+28789]

(No symbol) [0x00007FF62DFF5B20]

[...]

This happens because the website was not fully loaded. We tried to click the button immediately after navigating to the URL, but the server needs some time to respond and the JS must be fully loaded.

So let’s add a way to wait before clicking the button.

Waiting for elements

There are two main ways to wait for an element: implicit and explicit waits.

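An implicit wait tells the driver to keep polling for up to X seconds whenever it looks up an element, instead of failing immediately. It is often lumped together with a page load timeout and a plain time.sleep, although each of them does something different:

# Implicit wait: every find_element call retries for up to 10 seconds

driver.implicitly_wait(10)

# Page load timeout: driver.get() raises TimeoutException if loading takes longer than 10 seconds

driver.set_page_load_timeout(10)

# Unconditional pause: always blocks for the full 10 seconds

from time import sleep

sleep(10)

These methods are not great for making sure an element is ready before performing an action, although they may work to generally slow down the scraper or to mimic a typical user’s pauses.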

A better way is to explicitly wait for an element to meet one of the following expected conditions:

title_is

title_contains

presence_of_element_located

visibility_of_element_located

visibility_of

presence_of_all_elements_located

text_to_be_present_in_element

text_to_be_present_in_element_value

frame_to_be_available_and_switch_to_it

invisibility_of_element_located

element_to_be_clickable

staleness_of

element_to_be_selected

element_located_to_be_selected

element_selection_state_to_be

element_located_selection_state_to_be

alert_is_present

You can read more about this in the Waits documentation.

from selenium import webdriver

from selenium.webdriver.common.by import By

from selenium.webdriver.support.wait import WebDriverWait

from selenium.webdriver.support import expected_conditions as EC

from selenium.webdriver.chrome.options import Options

from selenium.common.exceptions import TimeoutException

[...]

try:

    button = WebDriverWait(driver, 10).until(

        EC.element_to_be_clickable(

            (By.CSS_SELECTOR, "#onetrust-accept-btn-handler")

        )

    )

    button.click()

except TimeoutException:

    print("Timed out waiting for the button")

That way we wait (up to 10 seconds) until this particular element is clickable, which ensures that our scraper won’t fail because of slow loading.
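Conceptually, an explicit wait is a polling loop: the condition is checked again and again (WebDriverWait polls every 0.5 seconds by default) until it succeeds or the timeout runs out. The sketch below illustrates that idea in plain Python. It is not Selenium's implementation, and the name wait_until and its parameters are assumptions made for this example.

```python
import time


def wait_until(condition, timeout=10.0, poll_interval=0.5):
    """Call `condition` repeatedly until it returns a truthy value.

    Returns that value, or raises TimeoutError once `timeout` seconds passed.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(poll_interval)
```

The same pattern explains why an explicit wait is usually faster than a fixed sleep: it returns as soon as the condition holds instead of always waiting for the full timeout.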

Text input

Now let’s send some text to the web and search for some stuff.

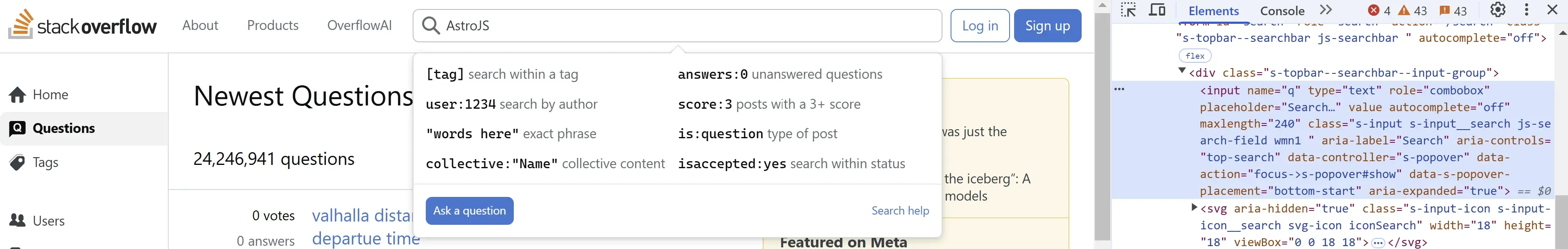

Firstly we located main search field and then sent some text:

search_input = driver.find_element(By.CSS_SELECTOR, "input[name='q']")

search_input.send_keys("AstroJS")so you should see:

As you can see, this input filling feels pretty robotic, as it has instantly filled entire word. To feel more human like, we can add random delays between putting next letter.

import time

import random

search_input = driver.find_element(By.CSS_SELECTOR, "input[name='q']")

query = "AstroJS"

for letter in query:

    search_input.send_keys(letter)

    time.sleep(random.uniform(0.1, 0.3))  # Random delay between 100ms and 300ms
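The typing loop above can be wrapped in a reusable helper. This is a minimal sketch: the name type_like_human and the injectable sleep parameter are choices made for this example, not anything provided by Selenium.

```python
import random
import time


def type_like_human(element, text, min_delay=0.1, max_delay=0.3, sleep=time.sleep):
    """Send `text` to `element` one character at a time with random pauses.

    `element` can be any object with a send_keys(str) method, such as a
    Selenium WebElement. `sleep` is injectable so the helper can be tested
    without actually waiting.
    """
    for character in text:
        element.send_keys(character)
        sleep(random.uniform(min_delay, max_delay))
```

With the search field from above, the call would look like type_like_human(search_input, "AstroJS").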

Keyboard click simulation

Now we can send this search form by clicking ENTER, same as we would do.

from selenium.webdriver.common.keys import Keys

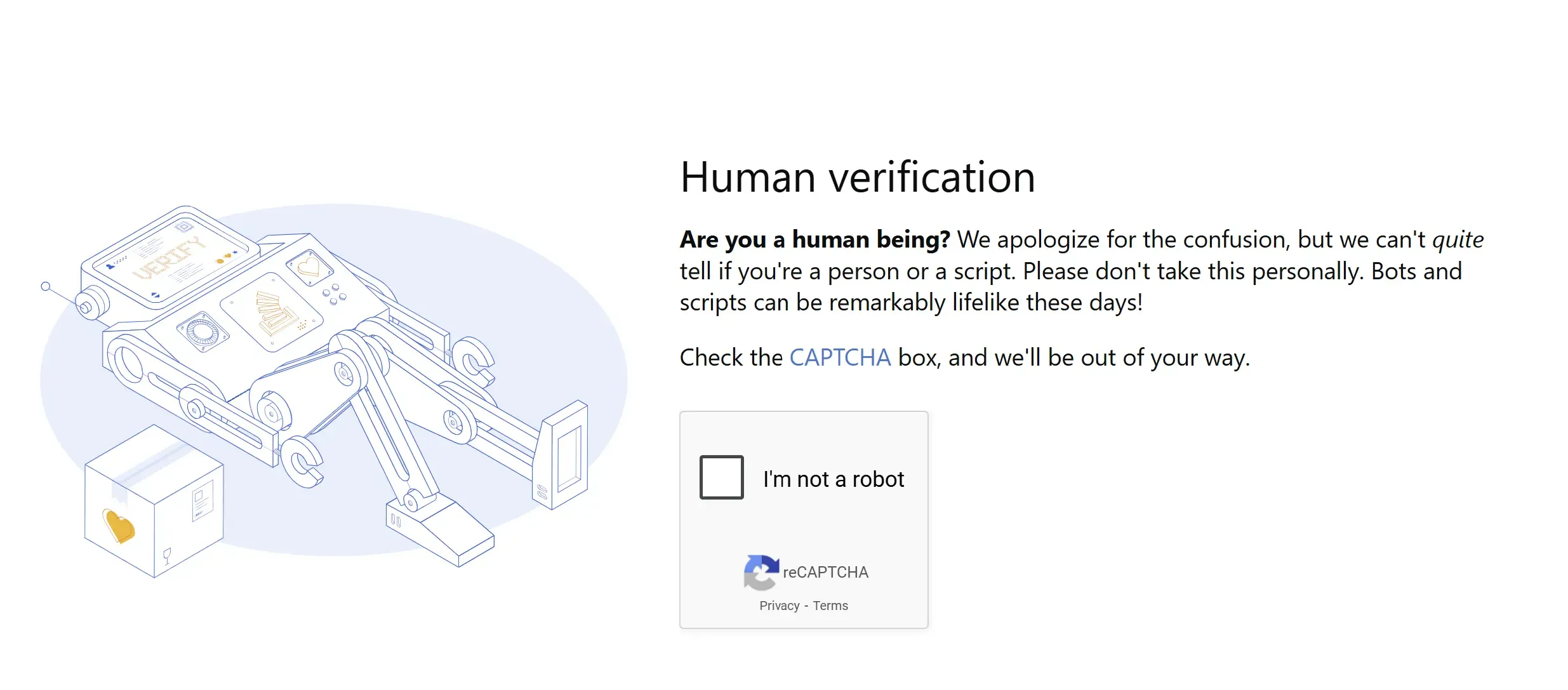

search_input.send_keys(Keys.ENTER)Your seach form should be sent and you’ll probably see CAPTCHA screen:

I won’t cover how to solve this captcha, because it will be too much for this article.

Basic Scraping with Python Selenium

However, let’s go back to our starting URL and do some scraping.

for post_div in driver.find_elements(By.CLASS_NAME, "s-post-summary--content"):

    # Locate the title link once and read both values from it

    link = post_div.find_element(By.CSS_SELECTOR, "h3 > a")

    href = link.get_attribute("href")

    title = link.text

    print(href, title)

In this code, we first found all elements (PLURAL!) with the class "s-post-summary--content", which are the divs holding the post details. Then we iterated over them.

For each element we extracted the link to the post and its title.
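In practice you will usually want to store the results rather than print them. Assuming the loop above appends (href, title) pairs to a list instead of printing, a sketch like the following turns them into CSV text. The function name posts_to_csv and the duplicate filtering are assumptions made for this example.

```python
import csv
import io


def posts_to_csv(posts):
    """Serialize (href, title) pairs to CSV text, skipping duplicate links."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["href", "title"])
    seen = set()
    for href, title in posts:
        if href in seen:
            continue
        seen.add(href)
        writer.writerow([href, title])
    return buffer.getvalue()
```

The csv module takes care of quoting, so titles that contain commas or quotes do not break the file.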

Web Scraping with Selenium – Disadvantages

Selenium is a somewhat old-fashioned solution and, compared to other web automation tools, it lacks some essential features.

Speed of execution

Selenium is slow.

It’s pretty obvious that scraping with a real browser will be slower than with HTTP requests only, because we have to wait not only for the server, but also for all the JS to be loaded and executed. And sometimes the loading is intentionally slowed down.

That wouldn’t be an issue if Selenium were efficient, but it’s not. Selenium is like a huge, advanced machine that needs a lot of time and fuel to start up, and it’s neither swift nor handy to use.

However, in web scraping it’s generally a good idea to slow things down to appear more human-like, so this drawback may not be as problematic as it sounds.

Proxies

Many sites protect themselves from scraping by blocking IP addresses. That’s why scraping very often involves using proxies.

And here, Selenium has a huge problem.

Selenium doesn’t natively support proxy authorization that requires a username and password.

Setting up a proxy that doesn’t need credentials is pretty easy:

from selenium import webdriver

from selenium.webdriver.common.proxy import Proxy, ProxyType

prox = Proxy()

prox.proxy_type = ProxyType.MANUAL

prox.http_proxy = "ip_addr:port"

prox.ssl_proxy = "ip_addr:port"

# For a SOCKS proxy, set prox.socks_proxy together with prox.socks_version instead

# Recent Selenium 4 releases no longer accept desired_capabilities; attach the proxy to the options

options = webdriver.ChromeOptions()

options.proxy = prox

driver = webdriver.Chrome(options=options)

This approach will work if the proxy supports IP whitelisting authentication.
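An alternative to the Proxy object is Chrome's own --proxy-server command-line switch, passed like any other Chrome option. The helper below is a small sketch that builds the switch; the name proxy_server_argument is hypothetical, and the address in the usage note is a placeholder from a documentation IP range.

```python
def proxy_server_argument(host, port, scheme="http"):
    """Build Chrome's --proxy-server switch for a proxy without credentials."""
    port = int(port)
    if not 0 < port < 65536:
        raise ValueError(f"invalid proxy port: {port}")
    return f"--proxy-server={scheme}://{host}:{port}"
```

It would be used as chrome_options.add_argument(proxy_server_argument("203.0.113.10", 8080)). Like the Proxy object, this only covers proxies that do not ask for a username and password.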

However, if the proxy requires user:password, there is no easy built-in way to do this. To solve this issue, you can use an external library (I don’t know any good one to recommend), or use this pretty well-known hack, where proxy auth is handled by a tiny extension built before spawning the browser:

import json

import zipfile

from pathlib import Path

from selenium import webdriver

from selenium.webdriver.chrome.service import Service

PROXY = {

    "HOST": "proxy-host",

    "PORT": 8080,

    "USER": "proxy-user",

    "PASS": "proxy-password"

}

def create_proxy_extension(proxy):

    """Create a Chrome extension for proxy authentication"""

    # Note: Chrome is phasing out Manifest V2, so recent Chrome versions may refuse to load this extension

    manifest_json = {

        "version": "1.0.0",

        "manifest_version": 2,

        "name": "Chrome Proxy",

        "permissions": [

            "proxy", "tabs", "unlimitedStorage", "storage",

            "<all_urls>", "webRequest", "webRequestBlocking"

        ],

        "background": {

            "scripts": ["background.js"]

        },

        "minimum_chrome_version": "22.0.0"

    }

    # Doubled braces are literal braces inside the f-string

    background_js = f"""

    var config = {{

        mode: "fixed_servers",

        rules: {{

            singleProxy: {{

                scheme: "http",

                host: "{proxy['HOST']}",

                port: {proxy['PORT']}

            }},

            bypassList: ["localhost"]

        }}

    }};

    chrome.proxy.settings.set({{value: config, scope: "regular"}}, function() {{}});

    function callbackFn(details) {{

        return {{

            authCredentials: {{

                username: "{proxy['USER']}",

                password: "{proxy['PASS']}"

            }}

        }};

    }}

    chrome.webRequest.onAuthRequired.addListener(

        callbackFn,

        {{urls: ["<all_urls>"]}},

        ['blocking']

    );

    """

    plugin_file = 'proxy_auth_plugin.zip'

    with zipfile.ZipFile(plugin_file, 'w') as zp:

        # json.dumps produces valid JSON, unlike str() with quote replacement

        zp.writestr("manifest.json", json.dumps(manifest_json))

        zp.writestr("background.js", background_js)

    return plugin_file

def get_chromedriver(use_proxy=False, user_agent=None):

    """Configure and return a Chrome WebDriver instance"""

    options = webdriver.ChromeOptions()

    if use_proxy:

        plugin_file = create_proxy_extension(PROXY)

        options.add_extension(plugin_file)

    if user_agent:

        options.add_argument(f'--user-agent={user_agent}')

    driver_path = Path(__file__).parent / 'chromedriver'

    # Recent Selenium 4 releases take the driver path through a Service object instead of executable_path

    return webdriver.Chrome(service=Service(str(driver_path)), options=options)

Poor network data interface

Back in the day, web automation and web scraping with a browser were easier and more straightforward. As websites were mostly static, network inspection and manipulation weren’t that necessary.

Now, having a good interface to explore network operations—just like in Chrome DevTools—is essential.

And sometimes it’s good to have the ability to filter requests or run functions on them.

In Selenium we can do it this way:

import json

from selenium import webdriver

# Enabling Network Logging

chrome_options = webdriver.ChromeOptions()

chrome_options.set_capability('goog:loggingPrefs', {'performance': 'ALL'})

driver = webdriver.Chrome(options=chrome_options)

And after visiting a page and performing some actions, we can browse and parse the network logs:

log_entries = driver.get_log("performance")

for entry in log_entries:

    # Each entry wraps a DevTools event as a JSON string

    message = json.loads(entry['message'])['message']

    if message['method'] == 'Network.responseReceived':

        url = message['params']['response']['url']

        status = message['params']['response']['status']

        print(f"Received {status} response from {url}")

However, request filtering capabilities in Selenium are very limited, and in order to extend them you’ll have to use an additional proxy server, while in Playwright it’s built in.

In general, network manipulation in Playwright is much easier and more pleasant to use, and it is also better optimized.

Using Selenium is not a blocker when you need network interception, but it may be much more challenging.
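Since Selenium only hands us raw log entries, any filtering has to be done by hand after the fact. The sketch below wraps the log-parsing loop from this section in two small functions. The names extract_responses and failed_responses are made up for this example, and the entry layout is assumed to be the one used in the loop above.

```python
import json


def extract_responses(log_entries):
    """Return (status, url) pairs for every Network.responseReceived event."""
    responses = []
    for entry in log_entries:
        message = json.loads(entry["message"])["message"]
        if message.get("method") != "Network.responseReceived":
            continue
        response = message["params"]["response"]
        responses.append((response["status"], response["url"]))
    return responses


def failed_responses(log_entries, threshold=400):
    """Keep only responses whose HTTP status is at or above `threshold`."""
    return [
        (status, url)
        for status, url in extract_responses(log_entries)
        if status >= threshold
    ]
```

With a live session, the input would be driver.get_log("performance"), for example failed_responses(driver.get_log("performance")) to list requests that ended with an error status.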

Lack of unified interface for browsers

Selenium allows using different browser engines; however, the interface is not unified.

Switching from one browser to another may result in compatibility issues. Some things that worked in one browser may fail in another.

Low Performance

And finally, Selenium isn’t optimized for web scraping. When scraping with this tool, we’re using an actual browser application, which is much less efficient than fetching page content using only HTTP requests.

Using Selenium also leads to higher resource consumption during scraping.

Summary

So, you really have everything you need to scrape in Selenium. Selenium isn’t a tool designed for scraping, but feel free to go ahead and give it a try if you want to scrape using it 🙂

However, I’d like to add a digression here about the future of web scraping.

Tools designed to prevent web scraping are becoming increasingly effective. For now, proxies are a great way to bypass this blocking, but it’s a rather expensive solution.

Another problem is captchas, which are triggered when behavior that deviates from normal human use of the site is detected.

Browser-based tools that support JavaScript execution and image rendering are becoming essential.

I think their importance will continue to grow, forcing scrapers to give up scraping speed in favor of keeping up appearances and pretending to be a real user of the site.

Then the skill of “web scraping with Selenium” might turn out to be very useful! 🙂

“Although there are faster and lighter alternatives like Playwright, Puppeteer, and Splash, Selenium remains one of the most popular choices! 🙂”

Thanks for making it to the end!

If you have any more questions about web scraping in Selenium, or web scraping or automation in general, feel free to ask!

Best regards! Kamil Kwapisz