I never thought I’d see the day when AI would be used to fight AI. But that’s exactly what happened last month.

On March 19, Cloudflare introduced AI Labyrinth - a new tool to silently fight back against web scrapers.

But let’s start from the beginning.

Antibot Systems vs Web Scrapers

First, we need to understand the arms race between antibot systems and web scrapers.

The internet holds a ton of useful data, and many companies want to scrape it and put it to use. However, site owners often don’t approve of this.

Why Are Web Scrapers Being Blocked?

There are a few reasons why websites block scraping bots:

- Protection of intellectual property,

- Protection of private data (GDPR compliance, etc.),

- Fraud prevention,

- Increased server load due to bots’ activity.

And this last reason was crucial for building AI Labyrinth.

The AI Revolution Strikes the Web Scraping Industry

AI companies changed web scraping just as much as they changed the general use of artificial intelligence. They entered the web scraping arena with enormous budgets because data is like fuel to LLMs.

And these companies are interested in ALL kinds of data. E-commerce, blogs, newspaper portals, images, podcasts – virtually everything imaginable is a target for these companies.

This caused problems for websites: the millions of requests sent from the servers of the biggest AI companies were effectively acting like a DDoS attack.

What is Cloudflare?

Cloudflare is a global cloud services provider focused on improving the security, performance, and reliability of websites and applications.

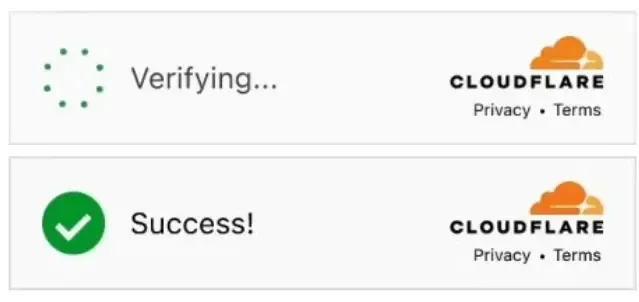

Two of their most popular products are the Web Application Firewall (WAF), which secures websites from DDoS attacks, and the Bot Management service for blocking bots. A key feature for blocking bots is Cloudflare Turnstile - their CAPTCHA solution.

What is AI Labyrinth?

According to the Cloudflare blog post, AI Labyrinth is a mitigation approach that uses AI-generated content to slow down, confuse, and waste the resources of AI Crawlers and other bots that don’t respect “no crawl” directives.
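To make “no crawl” directives concrete: the most common one is a robots.txt file, and a well-behaved crawler checks it before every request. Below is a minimal sketch using Python’s standard `urllib.robotparser`. The robots.txt content, the bot name `ExampleAIBot`, and the example.com URLs are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt; a real crawler would download it from the target site.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Disallow: /private/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content that has already been fetched."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def is_allowed(parser: RobotFileParser, user_agent: str, url: str) -> bool:
    """Return True if the rules permit this user agent to fetch the URL."""
    return parser.can_fetch(user_agent, url)


parser = build_parser(ROBOTS_TXT)
for agent, url in [
    ("ExampleAIBot", "https://example.com/blog/post"),
    ("OtherBot", "https://example.com/blog/post"),
    ("OtherBot", "https://example.com/private/data"),
]:
    print(agent, url, is_allowed(parser, agent, url))
```

A crawler that skips this check, or ignores its result, is the kind of bot AI Labyrinth is aimed at.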

How Does AI Labyrinth Work?

The first step in the process is to detect if a user accessing the site is a bot or a real human being. This is something Cloudflare has been doing for a long time. Before AI Labyrinth existed, suspicious users would see a CAPTCHA (Cloudflare Turnstile) to verify whether they were bots or simply humans with unusual browser or proxy configurations.

With this new feature, instead of a CAPTCHA, Cloudflare can present a suspicious visitor with a next-generation honeypot.

AI Labyrinth creates a honeypot that is a set of links to “fake” pages generated with AI.

Yes, you understood it correctly. They are using AI to fight AI.

According to Cloudflare, these fake pages are pre-generated and are displayed only when user activity is suspicious. Furthermore, links to fake pages should not be visible to real users and, with properly crafted meta tags, they are designed not to be indexed by search engines like Google.

Because of this set of internally linked fake pages, bots will end up crawling through them, losing money and time downloading irrelevant content rather than the expected data.

Moreover, it will be very easy to spot bots because only bots will visit these fake pages.
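Here is a rough sketch of how that spotting could work on the server side. It is not Cloudflare’s implementation. It assumes the fake pages live under a hypothetical `/decoy/` path prefix and that the access log is available as simple (client, path) pairs.

```python
from collections import defaultdict

# Hypothetical prefix under which decoy pages are served (an assumption for this sketch).
DECOY_PREFIX = "/decoy/"


def flag_clients(requests):
    """Count decoy-page hits per client.

    `requests` is an iterable of (client_id, path) pairs. Real users never
    see links to decoy pages, so any client with at least one hit is suspect.
    """
    hits = defaultdict(int)
    for client_id, path in requests:
        if path.startswith(DECOY_PREFIX):
            hits[client_id] += 1
    return dict(hits)


access_log = [
    ("203.0.113.5", "/products"),
    ("198.51.100.7", "/decoy/page-1"),
    ("198.51.100.7", "/decoy/page-2"),
    ("203.0.113.5", "/about"),
]
print(flag_clients(access_log))
```

The appeal of a honeypot signal like this is that it produces almost no false positives: a human has no way to reach those URLs by accident.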

How Will AI Labyrinth Impact Web Crawling?

Antibot systems from Cloudflare and other companies have existed for quite some time, but web scrapers continue to win the arms race and effectively scrape data.

However, AI Labyrinth is quite disruptive because it can be completely silent initially. Until now, when scraping a Cloudflare-protected website, you got instant feedback on whether you were being treated like a bot: you would see a CAPTCHA. If you saw it, you knew your scraper needed adjustments.

With Labyrinth turned on, web scrapers may not be aware they’ve been detected.

This kind of solution may be considered revolutionary because, before, honeypots were often considered risky. Google stated that creating invisible links is a black-hat SEO technique and could lead to penalties, so not every site was willing to take the risk. However, I guess if such a large company implements this, Google might view this differently.

Will it Really Impact Web Scraping?

In some cases, yes, of course, but mostly… no. And here’s why:

- Cloudflare bot protection likely uses the same algorithms as before. If a web scraper was able to get data from a Cloudflare-protected site before, it likely will still be able to do so.

- If links are not visible to humans, they have to be styled in a certain way that can be checked programmatically before visiting these links. I’ll take a guess that most scrapers will fall for this trap initially, but eventually, solutions for spotting fake links will be publicly available.

- Web drivers can’t interact with invisible elements, so each crawler using JS rendering should be safe from AI Labyrinth. And nowadays, a lot of scrapers use real browsers to fetch dynamically rendered content.

- Additional links to scrape won’t significantly impact the budget of AI companies. Of course, scraping every single URL, image, and stylesheet incurs costs, but at such a huge volume of requests, the extra pages probably won’t be a significant financial burden, especially once these companies understand how to detect fake links. And let’s be honest, not all links will be faked.

- Cloudflare can’t ban every suspicious user. That’s why web scrapers are still winning the arms race. You could present challenges and other irritating stuff to all users, but you would also irritate your real users, and nobody wants that. The harder a site is for bots, the harder it can be for human users because, in most cases, bot detection systems can’t be sure if a user is a bot or not.

- And lastly, even without AI Labyrinth, there were many AI-generated content pages. That’s the world we live in. Websites are generating content with AI that is often completely useless, so perhaps the content generated by Cloudflare won’t stand out significantly :)
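The second point above, checking how a link is styled before visiting it, could look roughly like the sketch below. It rests on a big assumption: that decoy links are hidden with the `hidden` attribute or an inline `display:none` / `visibility:hidden` style. The markup AI Labyrinth really uses is not described here, and links hidden through external CSS or a hidden parent element would slip past this check.

```python
from html.parser import HTMLParser

# Inline-style fragments treated as "invisible" (an assumption, not Cloudflare's markup).
HIDDEN_STYLE_MARKERS = ("display:none", "visibility:hidden")


class LinkSorter(HTMLParser):
    """Split <a href> links into visible and suspicious (hidden) ones."""

    def __init__(self):
        super().__init__()
        self.visible = []
        self.suspicious = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr = dict(attrs)
        href = attr.get("href")
        if not href:
            return
        # Normalize the inline style so "display: none" matches "display:none".
        style = (attr.get("style") or "").replace(" ", "").lower()
        hidden = "hidden" in attr or any(m in style for m in HIDDEN_STYLE_MARKERS)
        (self.suspicious if hidden else self.visible).append(href)


def split_links(html: str):
    """Return (visible_links, suspicious_links) found in the HTML."""
    sorter = LinkSorter()
    sorter.feed(html)
    return sorter.visible, sorter.suspicious


SAMPLE_HTML = """
<a href="/products">Products</a>
<a href="/trap-1" style="display: none">More</a>
<a href="/trap-2" hidden>Archive</a>
<a href="/about" style="color: red">About</a>
"""
visible, suspicious = split_links(SAMPLE_HTML)
print("follow:", visible)
print("skip:", suspicious)
```

A crawler would then only queue the links in `visible`. A real browser with computed styles would do this more reliably, which ties in with the point about web drivers.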

In my opinion, this new feature will, as is often the case, make web scraping harder for less experienced developers, while allowing professionals to raise prices.

Impact on AI Companies and LLMs

But will it effectively impact LLM providers? As I said before, I think developers from AI companies will quickly understand how to avoid this AI Labyrinth (or maybe they already have… 😉).

However, it can still have a significant impact on those firms. They should definitely be ready for a budget increase, both for scraping infrastructure and for developers’ salaries.

It is also very important to quickly learn how to spot this fake content to ensure it won’t be used to train models, because in the data science world, garbage in = garbage out. But this is a challenge they’ve faced from the beginning of their existence, so they are likely well-prepared.

Best regards!